INVITED SPEAKERS

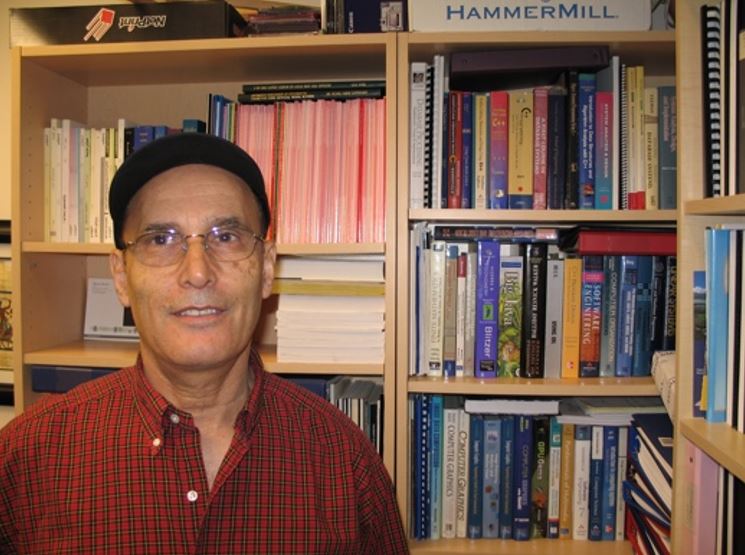

Professor Saif alZahir

Image Forensics: The Case of Image Forgery and Compression

Abstract

Image forensics is a branch of digital forensic science concerned with evidence found in images, such as forgery, in legal cases involving images. The literature is deficient in image forgery detection and localization methods that use only a fraction of the image or one of its transformed bands. In this talk, we introduce copula-based image quality indexes that can detect and localize forgery with high efficiency using a single band of the image's steerable pyramid. The simulation results showed almost perfect detection of more than one type of forgery. We also present a generic, application-independent lossless compression method for images consisting of a high number of discrete colors (fewer than 64 layers), such as digital maps and graphs. Our simulation results attained compression down to 0.035 bits per pixel, which outperformed established and published methods.

Biography

Professor Saif alZahir received his PhD and MS degrees in Electrical and Computer Engineering from the University of Pittsburgh, Pennsylvania and the University of Wisconsin-Milwaukee in 1994 and 1984, respectively. He conducted his postdoctoral research at the University of British Columbia (UBC), Vancouver, BC, Canada. Dr. alZahir is involved in research in the areas of image processing, deep learning, forensic computing, data security, networking, corporate governance, and ethics. In 2003, the Innovation Council of British Columbia, Canada, named him British Columbia Research Fellow. He has authored or co-authored more than 100 articles in peer-reviewed journals and conferences, two books, and five book chapters. He is the founder and editor-in-chief of the International Journal of Corporate Governance, London, England (2008 – present), was the founder and editor-in-chief of Signal Processing: An International Journal, KL, Malaysia (2009 – 2018), and is an Associate Editor of IEEE Access (CTSoc). Dr. alZahir has taught at seven universities in the US, Canada, and the Middle East, including the University of British Columbia (UBC); the University of Northern British Columbia (UNBC), where he was promoted to Full Professor in 2011; and the University of Alaska Anchorage (UAA). Dr. alZahir was the General Chair of the IEEE International Symposium on Industrial Electronics (ISIE), June 2022; the General Chair of the IEEE International Conference on Image Processing (ICIP), 2021; and the General Chair of the IEEE Nanotechnology Materials and Devices Conference (NMDC), September 2015. Currently, he is the General Chair of IEEE IV 2023 (Intelligent Vehicles), June 4-7, 2023; the General Chair of IEEE ISSPIT in October 2023; and the General Chair of the IEEE International Conference on Image Processing (ICIP), 2025. In addition, Dr. alZahir has served on many editorial boards and on the TPCs of many conferences.

Seniha Esen Yüksel

Under the Ground, Over the Oceans and Through the Space: Exploring the World with Imaging Beyond the Visible Spectrum.

Abstract

Hyperspectral cameras are specialized devices that capture hundreds of images from across the electromagnetic spectrum. This comprehensive collection allows each pixel to be identified and labeled by its material composition, be it grass, water, gold, cotton, or something else. The wealth of information provided by hyperspectral imaging has far-reaching applications in diverse fields such as agriculture, astronomy, medical imaging, and defense. In this presentation, I will introduce our research, which ventures beyond the limitations of the visible spectrum using radar and hyperspectral images, seeing under the ground, over the oceans, and through space. Additionally, I will outline our current research on deep denoising networks for hyperspectral imaging (HSI), introduce current trends, discuss the future of HSI in light of the ongoing paradigm shifts in the imaging pipeline, and touch upon low-cost programmable cameras that bring HSI capability to our cell phones.

Biography

Dr. Seniha Esen Yuksel received her B.Sc. from the Middle East Technical University, Department of Electrical and Electronics Engineering, Turkey in 2003; her M.Sc. degree from the University of Louisville, Department of Electrical and Computer Engineering, USA in 2005; and her Ph.D. degree from the University of Florida, Department of Computer and Information Science and Engineering, USA in 2011. Subsequently, she served as a postdoctoral researcher at the University of Florida, Department of Materials Science, and as a lecturer at the Middle East Technical University Northern Cyprus Campus. Currently, Dr. Yuksel is an associate professor at Hacettepe University, Department of Electrical and Electronics Engineering. She also serves as the director of the Pattern Recognition and Remote Sensing Laboratory (PARRSLAB), where her research focuses on machine learning and computer vision using sensors that extend beyond the visible spectrum, such as hyperspectral, radar, X-ray, thermal, SAR, and LiDAR. Dr. Yuksel is an IEEE Senior Member and has been honored with the BAGEP Outstanding Young Scientist Award by the Science Academy. She has authored or coauthored over 80 articles published in peer-reviewed journals and conferences.

Emre Ertin

3D SAR imaging: from sparse signal models to implicit neural representations

Abstract

There is increasing interest in 3D reconstruction of objects from radar measurements. This interest is enabled by new data collection capabilities, in which airborne synthetic aperture radar (SAR) systems are able to interrogate a scene persistently and over a large range of aspect angles. Three-dimensional reconstruction is further motivated by an increasingly difficult class of surveillance and security challenges, including object detection and activity monitoring in urban scenes. The measurement process for SAR can be modeled as a Fourier operator, with each pulse providing a 1D line segment from the 3D spatial Fourier transform of the scene. Traditional Fourier imaging methods aggregate and index phase history data collected through different apertures in the spatial Fourier domain and apply an inverse 3D Fourier transform. However, when inverse Fourier imaging is applied to sparsely sampled apertures, reconstruction quality is degraded by the high sidelobes of the point spread function. In this talk we will start with a review of compressed sensing approaches that combine nonuniform fast Fourier transform methods with regularization priors for the scene, such as sparsity, azimuthal persistence, and vertical structures. Next, we will discuss different approaches to modeling anisotropic scattering from man-made objects. These lead to novel solutions to the 3D imaging problem that solve jointly over multiple neighboring views, exploiting the sparsity of dominant scattering centers in the scene and approximating the scattering coefficients with Gaussian basis functions in the azimuth domain. We will see that these sparse regularization methods are successful in producing point clouds consistent with the target shape. However, extracting a surface representation from the point clouds remains a challenge.
Recently, in computer vision, implicit deep neural representations such as Neural Radiance Fields (NeRF) and their derivatives have become popular volume rendering methods. In the final part of the talk we will demonstrate how a NeRF-like approach can be used to construct 3D surface representations from SAR data. We will illustrate the performance of each technique using measured and simulated datasets of civilian vehicles.

Biography

Emre Ertin received his BS degrees in Electrical Engineering and Physics from Bogazici University in 1992, and his MSc degree in Telecommunications and Signal Processing from Imperial College, London, UK in 1993. In 1999, he received his PhD degree in Electrical Engineering from The Ohio State University. From 1999 to 2002, he was with the Core Technology Group at Battelle Memorial Institute. He joined the Department of Electrical and Computer Engineering of The Ohio State University in 2003, where he is currently an Associate Professor. At OSU, he has served as principal investigator on NSF, AFOSR, AFRL, ARL, ARO, DARPA, IARPA, and NIH funded projects on sensor signal processing, novel modalities, and applications of sensor networks. He leads one of the three research thrusts at the NIH mHealth Center for Discovery, Optimization and Translation of Temporally Precise Interventions (mDOT), which aims to develop the methods, tools, and infrastructure necessary to pursue the discovery, optimization, and translation of next-generation mobile health interventions. He is the co-founder and director of technology for TegoSens, a technology startup developing radar-based solutions for monitoring lung water content in congestive heart failure patients.